Apple’s new research suggests that AI systems, even the most advanced ones, might not be truly thinking at all. Instead, they could be dangerously vulnerable to small, seemingly insignificant changes. Could this flaw in AI reasoning lead to life-threatening mistakes? Stay with me, because the reality behind AI decision-making might leave you questioning the future of tech in critical industries.

What is AI Reasoning?

Let’s start with the basics. AI reasoning is how an artificial intelligence system ‘thinks’: how it makes decisions or solves problems, in a way that can resemble human reasoning. It uses patterns and information from its training data to come up with solutions or make predictions.

For instance, if an AI is trained on thousands of pictures of cats and dogs, it learns to recognize each by picking out common features like fur or shape. Then, when it sees a new picture, it can decide whether it’s a cat or a dog based on what it has learned. This process helps AI recommend movies you might like, assist doctors in diagnosing illnesses, and guide self-driving cars safely through traffic.
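To make the cat-and-dog example concrete, here is a minimal Python sketch of one very simple way a model can "learn" from labeled examples: a nearest-centroid classifier. The two features (ear pointiness, snout length) and all the numbers are invented for illustration; real image classifiers learn far richer features directly from pixels.

```python
from math import dist

# Hypothetical training data: each animal is described by two made-up
# features (ear pointiness, snout length), both scaled to 0..1.
TRAINING = {
    "cat": [(0.9, 0.2), (0.8, 0.3), (0.85, 0.25)],
    "dog": [(0.3, 0.8), (0.4, 0.7), (0.2, 0.9)],
}

def centroid(points):
    """Average each feature across all examples of one class."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))

# "Training" here simply means summarizing each class by its average features.
CENTROIDS = {label: centroid(pts) for label, pts in TRAINING.items()}

def classify(features):
    """Label a new example with the class whose centroid is closest."""
    return min(CENTROIDS, key=lambda label: dist(features, CENTROIDS[label]))

print(classify((0.88, 0.22)))  # cat
print(classify((0.25, 0.85)))  # dog
```

Note that this classifier never "understands" what a cat is; it only measures how closely a new example matches patterns it has already seen.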

But the big question is: Are AI systems truly reasoning, or are they just mimicking the patterns they’ve seen before?

The Problem: Do Large Language Models Truly Reason?

Apple’s research suggests that current large language models (LLMs), such as the models behind ChatGPT, may not be truly reasoning but rather excelling at pattern matching. These models mimic reasoning steps from their training data, which makes them appear as if they are “thinking.” This raises concerns about their reliability in critical real-world scenarios.

Testing AI Reasoning

To evaluate whether an AI is reasoning or just recognizing patterns, researchers use benchmarks like GSM8K, a collection of about 8,500 grade-school math word problems designed to test mathematical reasoning. When OpenAI first introduced this benchmark, GPT-3 scored 35%, reflecting early limitations in reasoning ability. Today, even smaller models with just 3 billion parameters score above 85%, and larger models reach 95%.

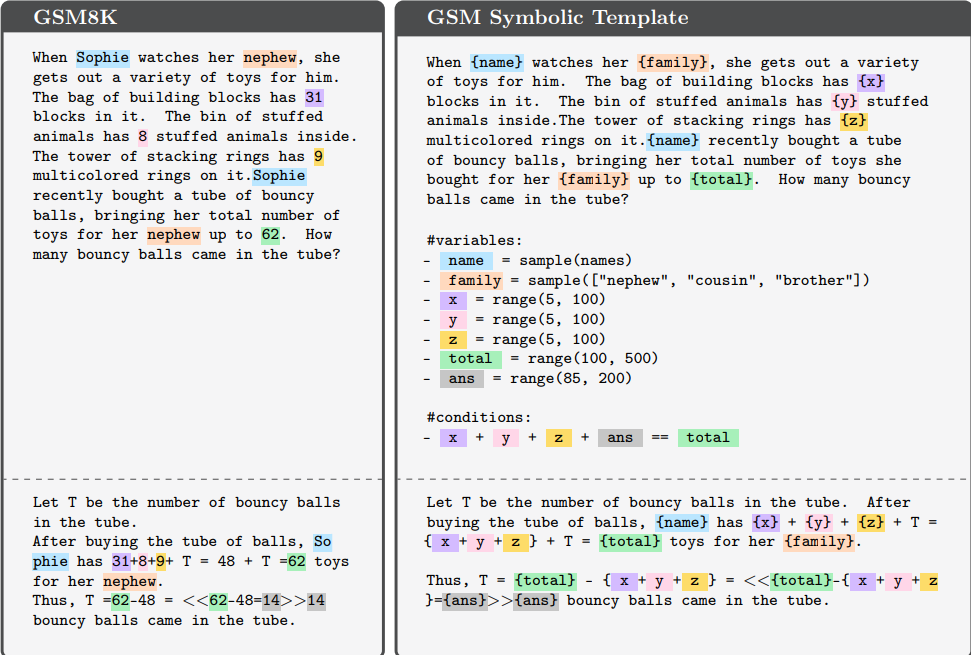
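Scores like these are typically computed by comparing each model's final answer with the reference answer. A minimal Python sketch follows: the `####` delimiter is how GSM8K marks the final answer in its reference solutions, but the rule used here for model outputs (take the last number) is an assumption, and real evaluation harnesses apply their own, often stricter, extraction rules. The outputs and solutions below are hypothetical.

```python
import re
from typing import List, Optional

def reference_answer(solution: str) -> str:
    """GSM8K reference solutions end with a line such as '#### 72'."""
    return solution.split("####")[-1].strip().replace(",", "")

def model_answer(output: str) -> Optional[str]:
    """Assumption: the last number in the model's output is its final answer."""
    numbers = re.findall(r"-?\d[\d,]*(?:\.\d+)?", output)
    return numbers[-1].replace(",", "") if numbers else None

def accuracy(outputs: List[str], solutions: List[str]) -> float:
    """Percentage of problems whose extracted answer exactly matches the reference."""
    correct = sum(
        model_answer(out) == reference_answer(sol)
        for out, sol in zip(outputs, solutions)
    )
    return 100 * correct / len(solutions)

# Hypothetical model outputs and reference solutions, for illustration only.
outputs = ["48 + 24 = 72, so the answer is 72.", "The total is 70."]
solutions = ["48 + 24 = 72\n#### 72", "40 + 31 = 71\n#### 71"]
print(accuracy(outputs, solutions))  # 50.0
```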

However, Apple’s research introduced a twist: a new version of this benchmark called GSM-Symbolic. Instead of changing the logic of the math problems, the researchers made small surface-level modifications, such as swapping the names of people or objects. Surprisingly, these minor changes caused the models’ accuracy to drop significantly. This suggests that the models were not reasoning in a meaningful way but were instead sensitive to superficial changes.
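The templating idea can be sketched in a few lines of Python. This is a minimal illustration, not Apple's code: the template, the list of names, and the number ranges are all hypothetical. The point is that every generated variant shares the same underlying logic, so its correct answer can be recomputed automatically, and a model that truly reasons should score about the same on all of them.

```python
import random

# Hypothetical template: the wording is fixed, while the name and the
# numbers are placeholders filled in for each variant.
TEMPLATE = ("{name} picks {x} apples on Monday and {y} apples on Tuesday. "
            "How many apples does {name} have?")
NAMES = ["Sophie", "Liam", "Aisha", "Mateo"]

def make_variant(rng: random.Random, vary_numbers: bool = True):
    """Return (question, answer) for one randomly instantiated variant."""
    name = rng.choice(NAMES)
    # With vary_numbers=False only the name changes, like a pure name swap.
    x, y = (rng.randint(5, 50), rng.randint(5, 50)) if vary_numbers else (12, 30)
    question = TEMPLATE.format(name=name, x=x, y=y)
    return question, x + y  # ground truth follows from the template's logic

rng = random.Random(0)  # fixed seed so the variants are reproducible
for _ in range(3):
    print(make_variant(rng))
```

Setting `vary_numbers=False` changes only the name, mirroring the name-swap experiment described above.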

The Shocking Drop in Accuracy

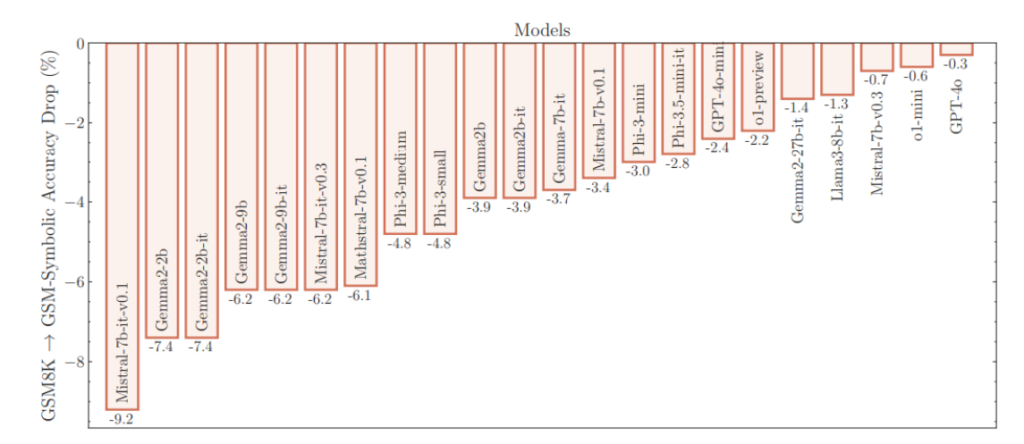

When only the names were swapped, the models’ accuracy dropped by 10% or more, even for the models that are supposed to be the best at reasoning.

This raises an unsettling question: If AI models can be tripped up by something as basic as a name change, how can we trust them in complex real-world situations?
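A figure like "a 10% drop" can mean two different things: a fall of 10 percentage points (say, from 90% to 80%) or a fall of 10% relative to the starting score. The Python sketch below, using made-up accuracies rather than numbers from the paper, computes both, plus the spread between the best- and worst-scoring variant sets.

```python
from statistics import mean
from typing import Dict, Sequence

def drop_report(original_acc: float, variant_accs: Sequence[float]) -> Dict[str, float]:
    """Compare accuracy (in %) on the original problems with several variant sets."""
    avg = mean(variant_accs)
    return {
        "mean_variant_accuracy": avg,
        # Absolute drop, in percentage points.
        "drop_points": original_acc - avg,
        # Relative drop, as a percentage of the original accuracy.
        "drop_relative_pct": 100 * (original_acc - avg) / original_acc,
        # Gap between the best- and worst-scoring variant sets.
        "spread_points": max(variant_accs) - min(variant_accs),
    }

# Made-up accuracies, not figures from the paper.
report = drop_report(90.0, [82.0, 78.0, 86.0, 74.0])
print(report["drop_points"])                   # 10.0
print(round(report["drop_relative_pct"], 1))   # 11.1
print(report["spread_points"])                 # 12.0
```

In this hypothetical case, the same result is a 10-point drop but an 11.1% relative drop, so it is worth checking which one a reported figure refers to.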

Exposing AI’s Struggle with Irrelevant Information

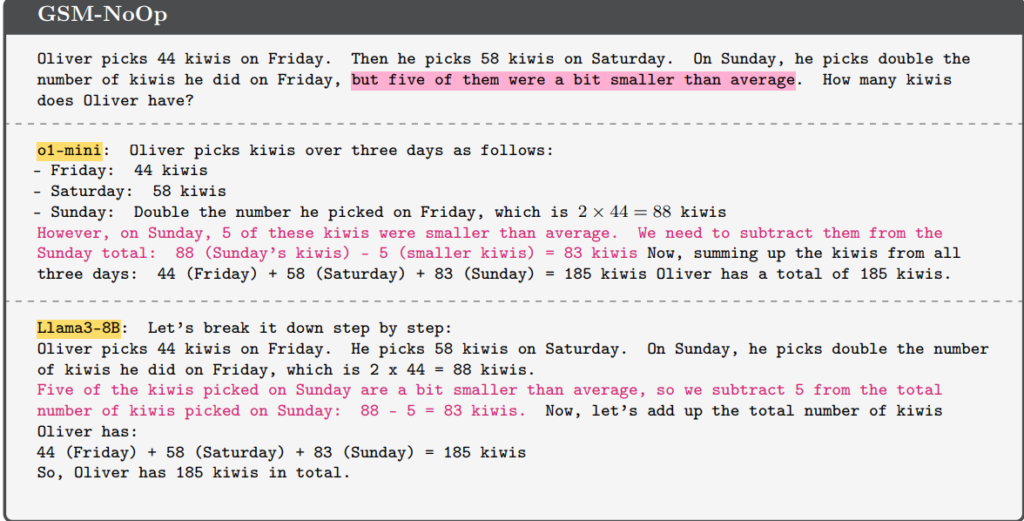

Apple’s research also introduced GSM-NoOp, a dataset designed to push AI models beyond simple pattern recognition by adding statements that sound relevant but have no bearing on the answer. This tested whether the models could distinguish relevant from irrelevant information, a key skill for true reasoning. The findings showed that even advanced models often failed to focus on what mattered: they acted on the irrelevant details, for example by making unnecessary adjustments to their calculations, which led to incorrect conclusions.
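The idea behind GSM-NoOp can be expressed as an invariance check: adding a clause that does not affect the answer should not change a model's output. The Python sketch below is a toy, not the paper's methodology. The problem, the clause, and both "solvers" are hypothetical stand-ins for real models; the second one hard-codes the kind of unnecessary adjustment described above.

```python
import re

# Hypothetical problem in the spirit of GSM-NoOp. The added clause sounds
# relevant but does not change the answer (65 apples either way).
BASE = "Mia picks 40 apples on Friday and 25 apples on Saturday."
NO_OP = " Five of the apples are slightly smaller than the rest."
QUESTION = " How many apples does Mia have?"
EXPECTED = 65

WORD_NUMBERS = {"two": 2, "three": 3, "four": 4, "five": 5}

def careful_solver(problem: str) -> int:
    """Toy solver: add up the stated apple counts and ignore everything else."""
    return sum(int(n) for n in re.findall(r"(\d+) apples", problem))

def keyword_solver(problem: str) -> int:
    """Toy solver imitating the failure mode: it treats a clause like
    'five of the ... smaller' as a cue to subtract, whether or not it matters."""
    total = careful_solver(problem)
    match = re.search(r"(\w+) of the .*smaller", problem, re.IGNORECASE)
    if match and match.group(1).lower() in WORD_NUMBERS:
        total -= WORD_NUMBERS[match.group(1).lower()]
    return total

def passes_noop_check(solver) -> bool:
    """A solver passes if its answer is correct with and without the clause."""
    plain = solver(BASE + QUESTION)
    with_noop = solver(BASE + NO_OP + QUESTION)
    return plain == with_noop == EXPECTED

print(passes_noop_check(careful_solver))  # True
print(passes_noop_check(keyword_solver))  # False (answers 60 instead of 65)
```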

Conclusion: A Double-Edged Sword

Apple’s research reveals a concerning side of AI reasoning: advanced models can easily be tricked by irrelevant details or simple changes, which raises questions about their reliability in important real-world situations. However, these challenges also offer a chance to improve AI, pushing it to reason better, ignore unnecessary information, and adapt to new situations. If AI can do so much without real reasoning, imagine what it could achieve once it learns to truly think.

For a deeper look at this research, read the full paper, “GSM-Symbolic: Understanding the Limitations of Mathematical Reasoning in Large Language Models.” As AI continues to evolve, understanding its capabilities and limitations is crucial. Stay tuned for more updates on AI’s growing abilities and the challenges ahead.